In the hair loss industry, the phrase “science-backed” no longer means anything. Due to broad legal definitions of “hair loss” and “hair growth”, marketers have uncovered a repeatable way to manipulate their clinical trial designs to nearly guarantee good results for products that, on balance, probably won’t do much at all.

They do this by:

- Starting a study in September to produce false-positive hair regrowth due to hair cycle seasonality

- Jam-packing people who just quit minoxidil into a placebo group, so they shed lots of hair

- Picking study participants with temporary forms of hair loss that will self-resolve without a pill

- Advertising a “clinically studied” product, then bait-and-switching to sell an unstudied product

- Grouping vellus and terminal hairs together to force statistically significant hair count results

…and more.

In this guide, we’ll expose these tactics by giving examples of big brands that engage in them. Then, we’ll reveal a framework you can use to protect yourself from bad product purchases: understanding evidence quality.

Specifically, we’ll uncover:

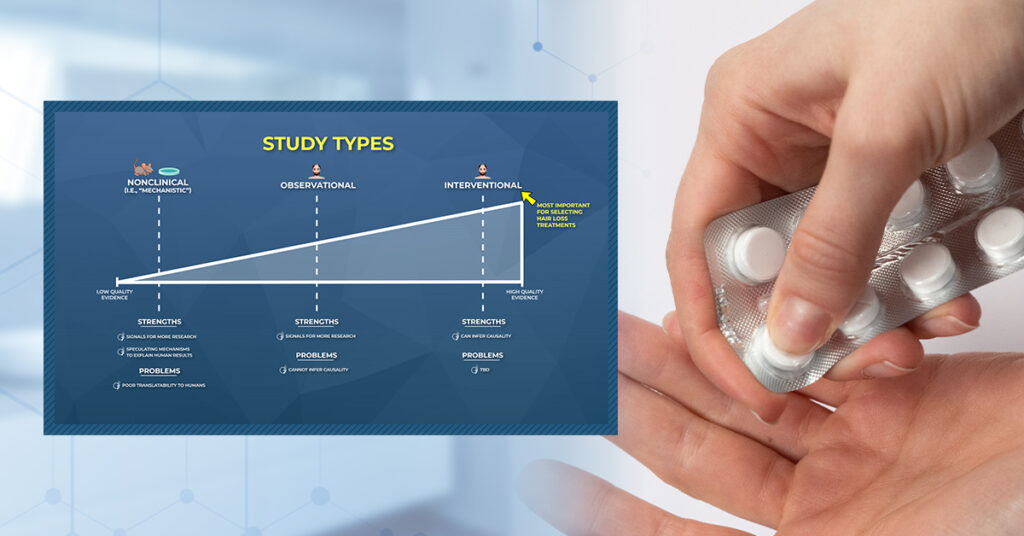

- The three major study types – nonclinical, observational, and interventional – and how marketers manipulate them

- Randomized, blinded, controlled clinical trials: what these are and why they’re still not enough to prevent fraud

- How to manipulate clinical trials to get any result you want: hand-select the wrong participants, truncate study durations, only report biased hair count measurements, bait-and-switch with product-market fit, and more.

Study Types

In hair loss research, we can expect to see three types of studies in peer-reviewed journals:

- Nonclinical (i.e., studies done in animals and/or petri dishes)

- Human – Observational (i.e., studies done on humans that observe behaviors over time)

- Human – Interventional (i.e., studies done on humans that test treatments)

Differentiating between study types is important, because not all studies are created equal.

- Nonclinical studies are great for exploring how treatments might regrow hair, but their results often don’t translate to humans.

- Observational studies can give us signals for new treatment options, but they also cannot infer causality.

- Interventional studies can tease out cause-and-effect, but if they aren’t randomized, blinded, and controlled, their designs can be manipulated to get almost any result a researcher wants (more on this soon).

Below we’ll dive deeper into each study type: what they are, what we can (and cannot) discern from them, and how marketers co-opt our misunderstandings of the research to sell us hair growth products that, in some cases, are scientifically baseless.

1. Nonclinical (i.e., Non-Human Data)

Nonclinical studies are typically done in petri dishes and on animals. These studies can help us learn how diseases develop, show us ways in which treatments might work, and/or help establish ballpark drug safety profiles.

| Nonclinical Studies | |
|---|---|
| Definition | Studies done on petri dishes and/or animals |
| Strengths | Establish ballpark safety profiles for compounds, learn how treatments might work (i.e., mechanisms) |
| Problems | Poor translatability to humans, cannot infer causality |

Hypothetical Example – Drug Safety Testing

Say researchers want to study the effects of a novel drug on heart tissues. Before ever giving it to humans, they’ll want to establish a safety profile. Nonclinical research is one of the safest ways to do this.

So those researchers might set up a study on mice. They give those mice a range of drug doses and, after some time, sacrifice them and dissect their tissues to gauge any changes to their hearts.

These results will help establish a drug safety and efficacy profile. Depending on the results, those researchers might recommend specific doses for humans to initiate a clinical trial (i.e., a study that’s done on humans, not mice).

Problems

Unfortunately, nonclinical studies don’t always predict what will happen in humans. That’s because humans are not petri dishes or mice. Our biology operates differently.

What’s more, the doses in mechanistic studies are often thousands of times higher than the doses given to humans. This makes nonclinical research so limited and, at times, even misleading.

The misapplication of nonclinical study results to humans is not always just a matter of a treatment “not working”. Sometimes, the consequences can mean life-and-death. Here is an example.

Translatability Example – Vitamin E & Selenium For Prostate Cancer: Good For Mice, Bad For Men

In the 1990s, petri dish studies and animal models suggested vitamin E and selenium might help prevent the growth of prostate cancer. The signals were so encouraging that researchers decided to try these supplements on humans.

So, the government sponsored a 35,000+ person study scheduled to run for 12 years. Researchers were excited at the prospect of preventing prostate cancer with widely-available vitamins and trace elements – compounds that were not only inexpensive, but couldn’t be patented and sold to the public at obscene prices.

What a wonderful way to help prevent a cancer that affects nearly 1 in 8 men.[1]https://www.cancer.org/cancer/types/prostate-cancer/about/key-statistics.html#:~:text=stage%20prostate%20cancer.-,Risk%20of%20prostate%20cancer,rare%20in%20men%20under%2040. So, how did the study go?

Around year seven, the study was halted early: the supplements weren’t preventing prostate cancer, and the vitamin E group was trending toward more of it. Follow-up analyses then revealed something worse: some men taking vitamin E or selenium got the exact opposite of the intended effect, namely higher rates of prostate cancer. From the authors:[2]https://www.fredhutch.org/en/news/center-news/2014/02/vitamin-e-selenium-prostate-cancer-risk.html

Vitamin E alone raised the risk of prostate cancer by 63 percent in men who had low levels of selenium at the beginning of the study. Further, selenium supplementation raised the risk of a high grade cancer by 91 percent in men who had adequate levels of the nutrient at the outset.

In other words, the same treatment that helped mice hurt men.
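Figures like “63 percent” are relative increases in risk, not absolute ones. The sketch below shows that arithmetic using made-up case counts. The counts are hypothetical, and the trial’s published analyses used more involved statistical models, so this only illustrates what a relative increase means.

```python
def risk(cases: int, participants: int) -> float:
    """Share of a group that developed the outcome."""
    return cases / participants


def relative_increase_pct(treated_cases: int, treated_n: int,
                          control_cases: int, control_n: int) -> float:
    """Percent by which the treated group's risk exceeds the control group's."""
    risk_ratio = risk(treated_cases, treated_n) / risk(control_cases, control_n)
    return (risk_ratio - 1) * 100


# Hypothetical counts: 163 cases per 2,000 men on a supplement vs. 100 per 2,000 on placebo.
relative = relative_increase_pct(163, 2000, 100, 2000)
absolute = (risk(163, 2000) - risk(100, 2000)) * 100

print(f"relative increase: {relative:.0f}%")        # relative increase: 63%
print(f"absolute increase: {absolute:.2f} points")  # absolute increase: 3.15 points
```

In this toy example, a 63% relative increase corresponds to roughly three extra cases per hundred men; both numbers matter when judging harm.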

This isn’t a one-off phenomenon. A 2006 analysis of highly cited animal studies found that 18% of them were later contradicted when the same interventions were tested in human randomized trials.[3]https://pubmed.ncbi.nlm.nih.gov/17032985/

Note that contradictory results do not mean “no effect”; they mean the opposite effect. The percentage of studies showing some effect in mice but no effect in men is even larger.

The key lesson here: don’t use mechanistic studies to make treatment decisions – for your health, or for your hair. In many cases, you’ll get it wrong. In some cases, you’ll hurt yourself.

Yet this is exactly what people do – accidentally or not – in the hair loss industry. Hair loss forums are overrun with people trying experimental hair loss interventions and justifying them with nonclinical and/or mechanistic studies.

These posts are often made in good faith, but they often come detached from a thorough understanding of that intervention’s translatability to humans, its true efficacy in humans, and/or any safety concerns. Here’s an example.

Hair Loss Industry Example – Reishi Mushroom Extract For Androgenic Alopecia

In this post, one Redditor asked if reishi mushroom extract might help fight androgenic alopecia (AGA). They linked to an article claiming that reishi mushroom extract has been shown to inhibit 5-alpha reductase enzymatic activity in petri dishes, and thus might be effective at lowering dihydrotestosterone (i.e., DHT) – the hormone causally associated with the balding process.[4]https://www.reddit.com/r/tressless/comments/ktbkw3/reishi_mushroom_for_hair_growth/

Unfortunately, there are no human data to corroborate that these findings translate to humans. So we don’t know if reishi mushroom extract helps or hurts. We also don’t yet know if it’s safe in extract form.

For reference, keep in mind just how much testing a compound will typically undergo before moving to human trials:

On Reddit, most people are doing this unintentionally, and without malice. These people are often just intellectually curious and looking for help with their hair.

But what about companies selling hair growth products? Should they be held to a higher standard for misappropriating – or even obfuscating – hair loss studies for financial gain?

Hair Loss Industry Example – Carbon 60 & Copper Peptides for Treating Androgenic Alopecia

Recently, we reviewed a company called Aseir Custom, a natural brand selling a hair growth topical called Auxano Grow. The product contains a combination of copper peptides (GHK-Cu) and Carbon 60 (C60).

In the company’s advertisements, its founders claimed that, “Auxano Grow stops hair loss and regrows hair.” On their website, they cited several scientific studies that, at face value, appeared to support the use of GHK-Cu and C60, all touting the ingredients’ anti-inflammatory and anti-fibrotic effects.

But a closer look at the studies revealed something problematic:

- Nearly all of those studies were on petri dishes and animals, not humans.

- Of the few studies done on humans, nearly all of them tested these ingredients for wrinkle reductions, not hair growth.

- Of the two human studies testing hair growth, the C60 study showed no effect on hair regrowth, while the GHK-Cu study (which also included other ingredients in its formulation) showed that 43% of consumers using that product reported poor results.

In other words, Auxano Grow’s advertisements over-emphasized the results of nonclinical studies on their product ingredients, while simultaneously downplaying study results showing that those nonclinical findings didn’t translate to human hair regrowth.

For what it’s worth, Auxano Grow is not the only company engaging in this marketing sleight-of-hand. As we’ll soon demonstrate, this is a systemic problem in the hair loss industry.

Key Takeaways: Mechanistic studies create signals that we use to conduct better research. But they cannot tell us if a treatment will work in humans. And this is why mechanistic data ranks very low on the hierarchy of evidence.

For data with a higher likelihood of translating to human outcomes, we can’t turn to petri dishes or mice. We have to turn to real human data. And the first level of human data comes from observational research.

2. Clinical Studies (Observational)

Observational research is done on humans. Researchers observe people in their natural settings, then explore relationships between their behaviors and their health outcomes.

For instance, observational studies might collect information about a group of people’s demographics, weight, eating habits, and lifestyle choices. Then, they’ll see if any of these factors relate to the development of certain diseases or ailments.

| Clinical Studies (Observational) | |
|---|---|
| Definition | When researchers observe people in their natural settings, then explore relationships between their behaviors and specific health outcomes |
| Strengths | Identify signals which can be further explored in interventional studies |
| Problems | Confounding variables, cannot infer causality |

This type of research is also known as epidemiology. And while observational studies are filled with problems, they’re still effective at generating signals to explore in better-designed studies – sometimes for health, and sometimes for hair.

Benefits

Hair Loss Industry Example – Tribes In Papua New Guinea Protected From Baldness

In the hair loss industry, we can thank observational studies for the first clues that the hormone DHT was involved in the balding process.

Almost 50 years ago, researchers who visited Guam and Papua New Guinea noticed that a relatively large number of men had been born as pseudohermaphrodites. They had ambiguous genitalia at birth but, during puberty, began developing male genitalia. As adults, they also had musculature and testosterone levels comparable to those of other males.

Fascinatingly, these pseudohermaphrodites also never showed any signs of pattern baldness later in life. Unlike other men, these men retained their prepubescent hairlines, did not develop a bald spot, and never went slick-bald, even late into adulthood.

Follow-up studies revealed that these men had a genetic mutation that disabled the gene for an enzyme called type II 5-alpha reductase. This enzyme converts testosterone into dihydrotestosterone (DHT), so these men had low levels of DHT. This led researchers to start exploring DHT-reducing drugs – like finasteride – for the treatment of AGA.

Fast-forward to today: finasteride is the gold-standard treatment for pattern baldness in men – all because of observational research that occurred 50 years ago.

Problems

That story is fascinating, and those findings were revolutionary. However, there’s an important caveat to the story. Those observational studies on male pseudohermaphrodites generated signals to further explore what causes pattern baldness. But those studies, by themselves, could not tell us what caused hair loss.

This is because, by itself, observational data cannot infer causality.

In other words, correlation does not mean causation. Just because two things are related does not mean one caused the other to change.

Example – Ice Cream Sales Don’t Cause Shark Attacks

Ice cream sales and shark attacks are strongly correlated: when one rises, so does the other. Do ice cream sales cause shark attacks? No. There’s a confounding variable – hot weather – that causes people both to buy more ice cream and to go swimming in the ocean.

This perfectly illustrates the problem with observational research. By itself, it cannot be used to tease out cause-and-effect. Rather, those observations have to be further explored with another type of data (more on this soon).

Even still, this doesn’t stop companies in the hair loss industry from finding (or convincing) dermatologists to confuse observational data with causality – all to sell you hair growth products.

Even worse, many of these dermatologists make claims that aren’t supported by human data.

Hair Loss Industry Example – “Dairy Causes Hair Loss”

We recently googled, “foods linked to hair loss”. The top article is from the shampoo juggernaut, Pantene.[5]https://www.pantene.in/en-in/hair-fall-problems-and-hair-care-solutions/hair-fall-causing-food/

At face value, the article boasts signs of credibility: (1) it’s from a worldwide brand in the hair care space, Pantene; and (2) it’s written by a dermatologist. Both of these factors signal trust to consumers who are hoping to find evidence-based advice on what causes hair loss and what promotes hair growth.

The article also exposes website visitors to the Pantene line of hair care products: shampoos, conditioners, and more.

Given its #1 rank in search results, consumers tend to assume that the information conveyed inside the article must be accurate. So, let’s take a look at the article’s claims – and how they stack up to the science.

The article claims that “dairy causes hair loss”. The argument to support that claim is as follows:

- Dairy contains fat

- Fat raises testosterone

- Testosterone is a reason for hair loss

The article cites two papers to support this claim. Neither paper mentions dairy as a cause of hair loss. Neither paper mentions fat as a cause of hair loss.[6]https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5315033/[7]https://www.researchgate.net/publication/333498018_Hair_Loss_-_Symptoms_and_Causes_How_Functional_Food_Can_Help In fact, both papers suggest the opposite: that deficiencies in essential fatty acids might cause hair loss.

Even still, let’s give the author and Pantene the benefit of the doubt. Let’s do our own research to see if any of these claims is true – starting with the idea that fat from dairy raises testosterone.

In actuality, there is no data to support this claim. This observational study found that, across dosing ranges, full-fat dairy consumption was not associated with any changes to testosterone.[8]https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3712661/

Moreover, this interventional study found the exact opposite was true – that full-fat dairy consumption reduced serum testosterone levels in otherwise healthy men.[9]https://pubmed.ncbi.nlm.nih.gov/19496976/

In conclusion, Pantene’s logic-leaping claims are not supported by their own references. They’re also not supported by observational or interventional data on full-fat dairy consumption.

And yet this article ranks #1 on search engines.

Key Takeaways. Observational research is useful to generate signals for researchers to explore in better-designed studies. But by itself, observational data cannot infer causality.

To truly tease out cause-and-effect, we need a different type of design: an interventional study.

3. Clinical Studies (Interventional)

Human interventional studies are designed prospectively. They don’t observe behavioral patterns of the past; they look toward the future. They have a group of people who start a hair loss treatment, then they observe what happens to those people as they use that treatment over time.

In this case, researchers are mostly curious about what happens to participants’ hair. Does it thicken? Does it get worse? Do the ratios of growing-to-shedding hairs change at all?

Prospective studies also give researchers a better grasp of adverse events, such as whether participants report any side effects after starting a treatment.

| Clinical Studies (Interventional) | |
|---|---|
| Definition | Studies done on humans that measure treatment outcomes prospectively (i.e., looking into the future) |
| Strengths | Can infer causality, can help determine efficacy and safety profiles |
| Problems | Can be manipulated depending on other study design elements (e.g., no randomization, no blinding, no controls; bias in participant selection; short study durations; biased endpoint measurements; etc.) |

In the hair loss industry, interventional studies are the only studies consumers should use to make treatment choices. Again, this is because nonclinical studies have poor translatability to humans, and observational studies, while good at generating hypotheses and signals, can contain confounding variables and cannot tease out cause-and-effect.

Conversely, interventional studies can tease out causality. So, what does a good interventional study look like?

At a baseline, it must be designed properly. A good interventional study needs these three key features:

- Randomized (i.e., participants were randomly assigned to different groups, rather than hand-picked)

- Blinded (i.e., both researchers and participants don’t know who’s getting the treatment)

- Controlled (i.e., there’s a placebo group and/or a treatment-control group to compare against)

If an interventional study is missing just one of these features, the results can be manipulated to get almost any result the researchers want. That’s not hyperbolic. We’ll prove it to you below.

The best way to illustrate the importance of these features is to detail what they are, and what happens if they’re missing.

Below, we’ll even provide real-life examples of hair loss studies that lack these key features, and why their absence makes results interpretation so difficult – if not impossible.
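As a quick screening aid, the three features above can be written as a simple checklist. This is a hypothetical helper for illustration, not a validated appraisal tool; real quality assessments also weigh participant selection, study duration, and how endpoints are measured.

```python
from dataclasses import dataclass


@dataclass
class StudyDesign:
    """Hypothetical summary of an interventional study's design."""
    randomized: bool
    blinded: bool
    controlled: bool


def missing_features(design: StudyDesign) -> list[str]:
    """List which of the three key design features the study lacks."""
    checks = {
        "randomization": design.randomized,
        "blinding": design.blinded,
        "control group": design.controlled,
    }
    return [feature for feature, present in checks.items() if not present]


# A study that randomized participants but had no blinding and no comparison group:
print(missing_features(StudyDesign(randomized=True, blinded=False, controlled=False)))
# ['blinding', 'control group']
```

An empty list is the minimum bar, not proof of a trustworthy result.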

Feature #1: Randomization

Randomization means that the hair loss patients in the study were randomly assigned to different groups, such as the treatment group or the group receiving a fake treatment (i.e., a placebo).

Randomization is critical; it prevents researchers from sorting participants based on who they think will respond most favorably to a treatment.

| Interventional Studies: Randomization | |
|---|---|
| Definition | Study participants are randomly assorted into different groups |
| Importance | Prevents researchers from intentionally sorting participants in a way that influences results |
| If Absent | Researchers can sort participants to manipulate study results |

At face value, this seems inconsequential. Who cares if participants in a study are randomized into different groups? But here is why it’s so important.

Problems (If Absent)

“Hypothetical” Example – Studying A Supplement For The Treatment Of Hair Loss

Say that we sell a nutritional supplement to hair loss sufferers, and we want to increase consumer trust by conducting a clinical study proving that our supplement regrows hair. How would we go about doing this to guarantee results?

It’s simple. Follow this exact framework for the study:

Step #1. Find 20 people for our study – half of whom just quit using the hair loss drug minoxidil 10 days ago.

Step #2. Put all of the people who quit minoxidil into our “control group”. That group will receive a sugar pill. Then, put all of the people who’ve never used any hair loss treatments into our “treatment group”. That group will receive our supplement.

Step #3. Wait three months, and then retest everyone’s hair counts.

All of the people inside our “control group” – i.e., the ones who had recently withdrawn from minoxidil – will be experiencing excessive hair shedding due to treatment withdrawal. This is a well-defined phenomenon known as treatment withdrawal-induced telogen effluvium. It’s temporary, and it’s at its worst around three months after quitting a hair loss treatment.

Just see this figure. The black and white circular dots represent minoxidil users, and the red vertical line represents the month in which the researchers withdrew them from minoxidil. From weeks 96 to 108, there is a dramatic and immediate decline in hair counts.

Since these minoxidil withdrawers were in our placebo group, we’ll now likely see a statistically significant difference between their hair counts (i.e., worse) and the users of our supplement (i.e., slightly worse or roughly the same).

Here is an illustration of what we might expect. The treatment group is our supplement; the placebo group is our sugar pill (taken exclusively by minoxidil withdrawers).

Step #4. Advertise the results of the study. After all, our results allow us to legally claim that our nutritional supplement “clinically improves hair loss” – even in a placebo-controlled clinical study.

This sort of result is only possible if randomization is absent.

If randomization is present, then those minoxidil withdrawers would’ve been randomly assigned and likely spread roughly evenly between the treatment and control groups. As they went through their withdrawal-induced sheds, we would’ve likely seen roughly equal hair count declines in both the treatment and placebo groups.

Under these circumstances, the above figure would’ve looked like this:

To some people, this “no randomization” example may seem conspiratorial, hyper-specific, and ridiculous. Unfortunately, it’s also 100% real.

A study with this exact design exists. It tested the supplement l-carnitine, and it’s promoted by the company Oxford Biolabs.

Hair Loss Industry Example – L-Carnitine Supplement + Topical (Oxford Biolabs)

There is a supplement for hair growth supported by a study designed exactly like this: no randomization, and inclusion criteria that allowed people who had stopped treatments like minoxidil just 10 days earlier to join the study.[10]https://www.scirp.org/journal/paperinformation.aspx?paperid=96669

It’s on l-carnitine – the main active ingredient inside Oxford Biolabs’ supplements and topicals – a product line marketed to hair loss sufferers who are searching for all-natural hair growth.

Please note that just because the researchers set up this study (1) without randomization and (2) with inclusion criteria that allowed treatment withdrawers, it does not mean they did exactly what we just described (i.e., load up the placebo group with minoxidil withdrawers).

However, there was no participant randomization, so we cannot take this possibility off the table. That makes interpreting this study’s results, unfortunately, impossible. The study cannot tell us much about l-carnitine’s effects on hair growth, because it was designed in a way that allowed for results obfuscation.

Key Takeaways. Randomization is when participants are randomly put into different groups – such as the treatment vs. placebo group. If randomization isn’t present, researchers can pick which participants get the treatment, and which don’t. If researchers also set up their study to include participants who recently quit using hair loss drugs – like in the case of the l-carnitine study – they can then sort all withdrawers into the placebo group. This will increase the difference in hair counts between placebo and treatment, and thereby manufacture statistically significant improvements to hair parameters for treatments that don’t actually have a positive effect.
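The allocation trick described in this section can be sketched as a toy simulation. All numbers are assumptions made up for illustration: 20 participants, 10 of whom recently quit minoxidil and lose about 20 hairs/cm² over three months from withdrawal shedding, plus random person-to-person noise. The supplement itself has zero effect. Hand-picking the quitters into the placebo group manufactures a large “benefit”; randomizing the same people makes it vanish on average.

```python
import random
import statistics

random.seed(7)

# Toy model (all numbers assumed): 3-month change in hair count, in hairs/cm^2.
# The supplement does nothing here; results depend only on who lands in which group.
WITHDRAWAL_SHED = -20.0  # assumed extra loss for someone who quit minoxidil ~10 days ago
NOISE_SD = 5.0           # assumed person-to-person variation


def hair_change(recent_minoxidil_quitter: bool) -> float:
    shed = WITHDRAWAL_SHED if recent_minoxidil_quitter else 0.0
    return shed + random.gauss(0, NOISE_SD)


def group_difference(treatment: list[bool], placebo: list[bool]) -> float:
    """Mean change in the treatment group minus mean change in the placebo group."""
    return (statistics.mean(hair_change(p) for p in treatment)
            - statistics.mean(hair_change(p) for p in placebo))


participants = [True] * 10 + [False] * 10  # True = recent minoxidil quitter

# No randomization: every recent quitter is hand-picked into the placebo group.
rigged = group_difference(treatment=participants[10:], placebo=participants[:10])

# Randomization: shuffle and split in half, repeated to show the typical outcome.
randomized = []
for _ in range(1000):
    shuffled = random.sample(participants, k=len(participants))
    randomized.append(group_difference(shuffled[:10], shuffled[10:]))

print(f"hand-picked allocation: supplement 'beats' placebo by {rigged:+.1f}")
print(f"randomized allocation:  average difference {statistics.mean(randomized):+.1f}")
```

Any single randomized trial can still be unbalanced by chance, which is one reason larger samples, or stratifying the randomization by factors like recent treatment use, help.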

Feature #2: Blinded

Blinded means that the investigators, the participants, or (preferably) both have no idea who is receiving the treatment and who is receiving the sugar pill.

| Interventional Studies: Blinded | |
|---|---|
| Definition | Researchers and/or participants don’t know who is getting a treatment |
| Importance | Controls for the placebo effect (participants) and subjectivity in results analysis (investigators) |
| If Absent | The placebo effect can obfuscate results; researchers might, consciously or not, read a benefit into treatments that have no benefit |

Blinding is important for two different reasons:

- Blinding participants is important because if patients know they’re receiving the treatment, they can start thinking more positively, which can itself help produce results. This phenomenon is known as the placebo effect, and it can be so powerful that some anecdotal reports have even credited it with reversing cancers.

- Blinding investigators is important because investigators who know who’s receiving the treatment might treat those patients differently when interacting with them, or they might change the way they analyze results in favor of producing an “effect” for the treatment they personally favor.

Let’s explore that latter problem in further detail, with a real-world example on a multiple sclerosis treatment.

Problems (If Absent)

Unblinded Bias Example – Multiple Sclerosis Study

In one study testing multiple sclerosis treatments, researchers sought to determine not only if an experimental treatment was working to manage the disease, but also what would happen when a subset of the investigators in charge of results analysis were “unblinded” when evaluating the patients.[11]https://n.neurology.org/content/44/1/16

So, they set up a study and, when it came time for investigators to measure results via patient behavioral tests, they told a portion of the researchers which patients had received treatment (i.e., they unblinded those researchers).

When those unblinded researchers ran their behavioral tests on patients, they reported that the experimental treatment outperformed the placebo. The blinded researchers reported no difference in the treatment versus placebo groups.

Same patients, same treatments, different results interpretations – all due to differences in subjective analyses. All that was needed to influence results was to simply let a subset of researchers know who was (and wasn’t) getting the treatment.

This is why blinding is so important. It removes this potential for subjective bias.

This same phenomenon can happen in hair loss research: if the researchers aren’t properly blinded, they might change the statistical tools they use in their analysis, or the way they analyze photos, all in favor of getting a result for the treatment.

Key Takeaways. Blinded means that the researchers, the participants, or preferably both (i.e., double-blinded) don’t know who is and isn’t receiving the treatment. If participants aren’t blinded, they can experience the placebo effect, whereby simply believing you’re receiving a treatment can lead to positive outcomes, even if you aren’t actually receiving it. If investigators aren’t blinded, they can change their approach to results analysis. This occurred in a study on multiple sclerosis patients, in which blinded investigators saw “no benefit” from treatment, whereas unblinded investigators saw “benefit” from treatment, despite both groups evaluating the same patients.
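In practice, blinding often relies on coded allocation: an independent party randomizes participants, labels identical-looking kits with arbitrary codes, and keeps the code-to-group key locked away until the analysis is finished. The function below is a hypothetical sketch of that general idea; its name and code format are invented, and real trials typically use dedicated randomization systems.

```python
import random


def make_blinded_allocation(participant_ids, seed=None):
    """Randomly assign participants to two equal arms, hidden behind kit codes.

    Returns (kit_codes, key):
      kit_codes: participant -> kit code (all that investigators and participants see)
      key:       kit code -> arm (held by a third party until the analysis is locked)
    """
    if len(participant_ids) % 2:
        raise ValueError("this sketch assumes an even number of participants")
    rng = random.Random(seed)
    arms = ["treatment", "placebo"] * (len(participant_ids) // 2)
    rng.shuffle(arms)
    # Unique, arbitrary four-digit codes that reveal nothing about the arm.
    codes = [f"KIT-{n}" for n in rng.sample(range(1000, 10000), len(participant_ids))]
    kit_codes = dict(zip(participant_ids, codes))
    key = dict(zip(codes, arms))
    return kit_codes, key


kit_codes, key = make_blinded_allocation([f"P{i:02d}" for i in range(1, 21)], seed=1)
print(kit_codes["P01"])
```

Assessors record outcomes against kit codes only; the `key` is joined back in once the analysis plan and data are locked, which removes the chance to favor one group while measuring.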

Feature #3: Controlled

In a controlled study, researchers include a treatment group plus at least one comparison group: either another approved treatment or a placebo. Think a sugar pill or a sham device: something that makes participants think they’re receiving the treatment when they aren’t.

| Interventional Studies: Controlled | |
|---|---|
| Definition | Study can compare the treatment group against a placebo group (e.g., a sugar pill) or a treatment-control group (e.g., minoxidil or finasteride) |
| Importance | Controls for the placebo effect and hair cycle seasonality; allows researchers to compare treatment against FDA-approved options |
| If Absent | Increased risk of false-positive or false-negative results; no contextualization of treatment efficacy or safety vs. other treatment options |

Typically, the best-designed studies will have all three groups:

- The treatment (i.e., the treatment the researchers want to evaluate)

- A placebo (i.e., a sugar pill)

- A treatment-control group (i.e., already-approved hair loss treatments, like minoxidil or finasteride)

That allows them to discern the effects of a treatment against an imaginary treatment (i.e., the placebo group). It also helps them discern how effective their novel treatment is versus options already available to consumers.

In hair loss studies, control groups are critical, and for at least two reasons: they help control for hair regrowth that can occur due to the placebo effect and/or hair cycle seasonality – both of which can obfuscate study outcomes and create false-positive and false-negative results.

1. Control Groups Help Control For The Placebo Effect

Let’s say a group of researchers run a six-month study to test the effectiveness of a new hair loss treatment, but they don’t have a control group. After six months, they discover that their treatment significantly improves hair counts.

At face value, these results look promising. However, without a control group, we cannot know how much of these hair count increases is coming from other factors – such as the “placebo effect” – and how these results might compare against other hair loss treatments already available to consumers.

Now let’s reexamine this same graph, only this time, under the hypothetical scenario whereby researchers also included both a treatment-control group (i.e., finasteride) and a placebo-control group (i.e., a sugar pill).

All of a sudden, these results don’t look as promising.

- The treatment still shows some effect, but its relative increase in hair counts is smaller compared to the placebo group.

- The treatment is also significantly less effective than finasteride at improving hair counts.

Without these groups included in the study, these researchers might’ve presumed their treatment was more effective than it actually is. In some cases, studies designed like this have revealed no effect for treatments that showed early signs of promise in lower-quality studies.
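The comparison logic in this scenario comes down to subtraction against each comparator. The numbers below are hypothetical, invented only to mirror the scenario above, not taken from any real study.

```python
# Hypothetical mean changes in hair count (hairs/cm^2) after six months.
# Invented to mirror the scenario above; not data from any real study.
mean_change = {
    "new treatment": 12.0,
    "placebo": 8.0,
    "finasteride": 20.0,
}


def difference_vs(arm: str, comparator: str) -> float:
    """How much more (positive) or less (negative) an arm improved than a comparator."""
    return mean_change[arm] - mean_change[comparator]


print(f"uncontrolled reading:  {mean_change['new treatment']:+.1f}")                # +12.0
print(f"vs. placebo:           {difference_vs('new treatment', 'placebo'):+.1f}")      # +4.0
print(f"vs. finasteride:       {difference_vs('new treatment', 'finasteride'):+.1f}")  # -8.0
```

An uncontrolled study would report only the first number. A real analysis would also test whether these differences exceed chance variation, which this arithmetic ignores.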

Hair Loss Industry Example – Setipiprant

In 2012, a group of researchers published a landmark study suggesting that prostaglandin D2 was not only elevated in balding scalps, but that this substance also inhibited hair lengthening in mice. This created some plausibility that prostaglandin D2 might be a new treatment target for AGA.

Excitingly, there was already a drug in development for asthma that targeted this same pathway: setipiprant. This oral medication blocks one of prostaglandin D2’s receptors (known as DP2 or CRTH2), and early clinical trials demonstrated a good safety profile in men and women. Could this medication be repurposed for the treatment of AGA? Researchers decided to find out, so they set up a phase II clinical trial lasting one year to compare setipiprant against a placebo group and a treatment-control group: oral finasteride.

In the interim, hair loss forums were ablaze with people trying their own homemade regimens targeting prostaglandins. Some people shared impressive before-and-after photos on forums. Others faded away and never shared results. Many people shared ambiguous or negative results, and appeared confused as to whether their prostaglandin regimens were doing anything at all.

Then, in 2021, researchers finally published the results of their phase II clinical trial on setipiprant. The findings? Setipiprant fared no better than placebo at improving hair parameters, and it significantly underperformed oral finasteride.[12]https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8526366/

Without a placebo-control group, researchers might’ve looked at this study and claimed that setipiprant “stabilized” hair loss between weeks 0-24. This is true, but it’s also true that the placebo group saw the exact same stabilization, which means the results could’ve entirely been a function of the placebo effect or hair cycle seasonality (more on that later).

Moreover, without a treatment-control group, researchers wouldn’t have realized just how poorly setipiprant performed against a treatment already available to consumers, like finasteride.

Fortunately, this study was designed well enough to elucidate both of these findings, and rule out the possibility that setipiprant is a viable hair loss treatment for men with AGA.

However, not all hair loss studies share this same high-quality design.

Hair Loss Industry Example – Uncontrolled Cross-Sectional Study On Scalp Massaging (2019)

In 2019, our team published an uncontrolled cross-sectional study exploring the effects of standardized scalp massages for androgenic alopecia. Participant adherence spanned 1-48 months, and overall, the data suggested that standardized scalp massages improve perceptions of hair regrowth in a time-dependent manner.[13]https://link.springer.com/article/10.1007/s13555-019-0281-6

This type of study is limited in its design. Among a myriad of study limitations, one of the biggest issues is that the study is uncontrolled. In other words, there was no placebo- and/or treatment-control group to compare against when evaluating the efficacy of this intervention.

Therefore, all that can really be gleaned from this study is that there appears to be a “signal” that is possibly worth exploring in better-designed studies: specifically, studies that are randomized, blinded, and controlled. Without any control groups, we cannot discern the effects of this intervention against the placebo effect or hair cycle seasonality (more on this later).

These limitations were partly why we chose to publish this study as open access. In doing so, we could make our data – along with the massage instructions and video demonstration – 100% free to access for anyone with an internet connection. That way, if anyone wanted to try this intervention on themselves, they wouldn’t have to pay a dime to try it. This is also why, whenever we write or speak about this study, we also emphasize its limitations, and we discuss its findings within the context of the study’s limited evidence quality.

With that said, this level of transparency is not universal in the hair loss industry. Many other companies will publish survey-based research on their own proprietary blends or product lines, but rather than mention the limitations of their own research, they’ll advertise those results to consumers without that context and use those studies to justify premium prices for their products.

Hair Loss Industry Example – Uncontrolled Survey Study On ProCelinyl (Revela)

Another company in the hair loss industry – Revela – conducted a similar study on a serum containing a trademarked molecule called ProCelinyl. They published their results as a white paper – which is an internally published study that is designed to look like a real study but did not undergo peer review.

This survey study ran just six weeks (more on that later), and it did not compare against a placebo group or a treatment-control group. Even still, this did not stop Revela from advertising their product as an alternative to minoxidil, and then charging $80-$100 per month for the product.

Without comparing ProCelinyl to minoxidil, Revela cannot make these claims. In doing so, they’re not only misleading consumers, but they’re also risking violation of truth-in-advertising laws.

2. Control Groups Help Control For Hair Cycle Seasonality

Throughout the year, our hair will cycle through periods of accelerated shedding and growth. In the Northern Hemisphere, lots of that shedding happens in July and August, and it can reduce our overall hair density by 5 to 10%.

This is what’s known as seasonal shedding, and it’s a subclinical form of telogen effluvium. It’s driven by things we have a hard time controlling, like UV radiation from sunlight and changes to our circadian rhythm.

If it’s not already obvious, these cyclical changes to our hair counts pose a problem when conducting studies on hair loss treatments – particularly when a study runs shorter than one year and/or when a study doesn’t have a control group.

Hypothetical Example – Seasonality-Driven False Negative Results

Say that a team of researchers wants to discern the effects of a new hair loss treatment. So, they set up a study in North America, begin it in June, and run it for six months.

After six months, they bring in their participants and, to their disappointment, they see no changes in hair counts at month six versus month zero.

By itself, this might be considered a negative result. However, if those same researchers had included a placebo and/or untreated group, they probably would’ve seen a drop in hair counts for the placebo and/or untreated group. Why? Because in July and August, the groups not receiving any real treatment would’ve likely undergone a big seasonal hair shed.

In this case, the absence of a placebo and/or untreated group makes the treatment group’s flat hair counts look like a negative result. This is what’s known as a false negative. If those groups had been included in the study, there likely would’ve been statistically significant differences between the treatment and control groups, and the treatment would’ve demonstrated efficacy.
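The false-negative scenario above can be sketched as a toy model in Python. The monthly hair-count index is invented (it is not measured data); it only mimics a 5-10% July/August shed followed by a partial recovery, and `treatment_boost` is an assumed, fixed gain the treatment adds by the end of the study.

```python
# Hypothetical hair-count index for an untreated scalp in the Northern
# Hemisphere (100 = June baseline). These numbers are invented; they only
# mimic a July/August seasonal shed and a partial recovery afterward.
SEASONAL_INDEX = {6: 100, 7: 97, 8: 93, 9: 93, 10: 94, 11: 95, 12: 96}


def percent_change(start_month: int, end_month: int, treatment_boost: int = 0) -> float:
    """Percent change in hair counts between two months.

    treatment_boost is an assumed number of index points the treatment adds
    by the end of the study; 0 represents a placebo or untreated group.
    """
    start = SEASONAL_INDEX[start_month]
    end = SEASONAL_INDEX[end_month] + treatment_boost
    return round((end - start) / start * 100, 1)


# June-to-December study of a treatment that genuinely adds 4 points
treated = percent_change(6, 12, treatment_boost=4)
placebo = percent_change(6, 12)

print(f"Treatment group: {treated:+.1f}%")   # flat, so it looks like a failure
print(f"Placebo group:   {placebo:+.1f}%")   # the seasonal shed shows up here
print(f"Difference:      {treated - placebo:+.1f} points")
```

Without the placebo line, only the flat treatment result would be visible.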

Hypothetical Example – Seasonality-Driven False Positive Results

Conversely, if these researchers were testing a treatment that was truly ineffective, but they started their study in August and did not include any control groups, they would’ve seen a rise in hair counts as their participants naturally recovered from seasonal shedding from August through December.

This is what’s known as a false positive result: a result that looks promising, but with a better-controlled study, would’ve been revealed to have been caused by factors other than the treatment (e.g., the placebo effect, seasonal hair shedding, etc.).
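The false-positive case can be sketched the same way. Again, the seasonal index is invented for illustration (100 = the pre-shed June level), and the study is assumed to start in August, near the bottom of the seasonal shed, with a treatment that adds nothing.

```python
# Invented seasonal hair-count index (100 = pre-shed June level), for a study
# that starts in August, near the bottom of the seasonal shed.
SEASONAL_INDEX = {8: 93, 9: 93, 10: 94, 11: 95, 12: 96}


def percent_change(start_month: int, end_month: int, treatment_boost: int = 0) -> float:
    """Percent change in hair counts; treatment_boost is an assumed effect size."""
    start = SEASONAL_INDEX[start_month]
    end = SEASONAL_INDEX[end_month] + treatment_boost
    return round((end - start) / start * 100, 1)


# A truly ineffective treatment adds nothing beyond the seasonal recovery
ineffective = percent_change(8, 12, treatment_boost=0)
placebo = percent_change(8, 12)

print(f"Uncontrolled result: {ineffective:+.1f}%")                 # looks like regrowth
print(f"Versus placebo:      {ineffective - placebo:+.1f} points")  # no real effect
```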

In the hair loss industry, uncontrolled studies running for six months (or shorter) have nearly equal odds of producing false-positive or false-negative results. Unfortunately, six-month uncontrolled studies are rampant; they’re used to promote a myriad of products that are often popularized online, but have very little clinical support behind their efficacy or safety.

Hair Loss Industry Example – Brotzu Lotion (Trinov)

Brotzu Lotion, now called Trinov, is a product promoted to people with AGA and/or alopecia areata.

In 2016, hair loss forums were abuzz about an interview with Dr. Giovanni Brotzu – the inventor of the lotion and a well-respected scientist from Italy. In the interview, Dr. Brotzu claimed that in his clinical experience testing the product, Brotzu Lotion completely stopped hair loss and regrew miniaturized hairs.

Eventually, these claims were put to the test in a clinical trial. However, the clinical trial ran only six months, and it didn’t have a control group. In the trial, Brotzu Lotion did not improve hair thickness, and only slightly improved hair counts.[14]https://onlinelibrary.wiley.com/doi/abs/10.1111/dth.12778

Moreover, because the study ran only six months and was uncontrolled, researchers could not determine whether those hair count changes were actually due to the product, or simply due to the placebo effect and/or hair cycle seasonality.

Key Takeaways. Control groups allow researchers to see how a treatment compares against other hair loss treatments and against placebo. This helps to control for hair count changes driven by (1) the placebo effect, and (2) hair cycle seasonality. In an uncontrolled six-month study, there are roughly equal odds that positive hair count changes are just a result of hair cycle seasonality. Therefore, control groups are critical to discern the true effect of any hair growth product.

Summary (So Far)

We’ve discussed the differences in nonclinical, clinical observational, and clinical interventional data. When it comes to hair loss, interventional data is the only reliable way to tease out cause-and-effect. Therefore, interventional studies are what we should prioritize when selecting our hair loss treatments.

But interventional studies alone are not enough to demonstrate the efficacy of a hair loss treatment. These studies also need to be randomized, blinded, and controlled. If any one of these features is missing, it’s possible for researchers and/or companies to manipulate study outcomes, produce false-positive results, and/or make it impossible to assess the validity of a study’s findings.

Scientists are well-aware of the problems we’ve just elucidated, because these problems present themselves (in different ways) across nearly all fields of medicine. For this reason, scientists have developed a framework that can be used by both researchers and laypeople to evaluate the quality of studies. It’s called the hierarchy of evidence.

Hierarchy Of Evidence

The hierarchy of evidence is a pyramid that helps us rank all of the study types we just discussed. The higher up on the pyramid a study sits, the better-designed that study is, and the less likely its results are to be biased.

Mechanistic studies (i.e., nonclinical) sit toward the bottom of the pyramid. For reasons we’ve already discussed, these studies come with the highest risk of bias, and the lowest likelihood of translating to clinical (i.e., human) results.

Observational studies (i.e., human observations) sit toward the middle of the pyramid. These studies can help create signals to investigate in better-designed studies. However, with a handful of exceptions, observational studies cannot infer causality. Observational studies also come with a risk of confounding variables and thereby false correlations, which elevate their risks of bias in results interpretation. For these reasons, observational studies by themselves should not be used to make hair loss treatment decisions.

Randomized, controlled studies (i.e., interventional human studies) sit at the top. These studies are, in most cases, the only way to establish cause-and-effect, and thereby treatment efficacy. And the best-designed interventional studies must be randomized, blinded, and controlled. This design dramatically reduces bias in results interpretation.

When it comes to using the hierarchy of evidence, here is a good rule-of-thumb:

- The higher up a study is, the more likely it is that the study results will match your real-world experience trying that intervention.

- The lower a study is, the less likely it is that the study results will match your real-world experience trying that intervention.

Put bluntly: petri dish studies showing that reishi mushroom lowers DHT are not compelling enough evidence to spend $50/month on a reishi mushroom supplement for our hair. But an interventional study showing the same might be.

Hair Loss Industry Example – MDhair Equates Petri Dish Study Results With Humans With Androgenic Alopecia

Unfortunately, interventional studies on reishi mushroom extract for androgenic alopecia don’t exist. However, this doesn’t stop companies – like MDhair – from finding doctors to write articles that falsely equate petri dish findings with outcomes in humans with androgenic alopecia.

This sort of behavior exploits consumers’ misunderstandings of evidence quality. It might improve product sales, but in the long run, it only serves to further erode trust in an industry that is already filled with bad actors.

If A Study Is Randomized, Blinded, & Controlled, Is It 100% Reliable?

Absolutely not.

Despite randomized, blinded, controlled clinical trials ranking toward the very top of the hierarchy of evidence, there are still ways to manipulate their results. In fact, this study design should really only act as a starting point for evaluating evidence quality on any hair loss intervention.

In the next section of this guide, we’ll reveal step-by-step strategies for manipulating clinical trials that, at face value, appear perfectly designed. We’ll also reveal companies who have engaged in these study design “practices”. If you’re fighting hair loss, chances are that you’ve heard of these companies and maybe even tried some of their products.

How To Cheat A Randomized, Blinded, Controlled Clinical Trial

The strategies we most often see employed to manipulate results from a clinical trial are as follows:

- Selecting study participants who have hair loss types that don’t match the consumers of the product

- Selecting study durations shorter than a single hair cycle

- Reporting total hair count changes, rather than terminal hair count changes

We’ll cover all of these (and more) in the following section – along with real-world examples of companies who’ve engaged in these exact practices.
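The design criteria this guide covers (randomization, blinding, a placebo control, participants who match the product’s consumers, a duration of at least six months, and terminal hair count reporting) can be folded into a simple screening checklist. The sketch below is a hypothetical illustration: the `StudyDesign` fields and flag wording are our own, not a standard instrument, and a clean result does not prove a product works.

```python
from dataclasses import dataclass


@dataclass
class StudyDesign:
    """Hypothetical summary of a hair loss study; field names are illustrative only."""
    randomized: bool
    blinded: bool
    placebo_controlled: bool
    participants_match_consumers: bool  # e.g., participants actually have AGA
    duration_months: float
    reports_terminal_hair_counts: bool


def red_flags(study: StudyDesign) -> list[str]:
    """Return the design problems discussed in this guide that a study exhibits."""
    checks = [
        (study.randomized, "not randomized"),
        (study.blinded, "not blinded"),
        (study.placebo_controlled, "no placebo control"),
        (study.participants_match_consumers, "participants may not match consumers"),
        (study.duration_months >= 6, "shorter than six months"),
        (study.reports_terminal_hair_counts, "terminal hair counts not reported"),
    ]
    return [problem for passed, problem in checks if not passed]


# A hypothetical six-week, uncontrolled survey study
survey = StudyDesign(
    randomized=False,
    blinded=False,
    placebo_controlled=False,
    participants_match_consumers=True,
    duration_months=1.5,
    reports_terminal_hair_counts=False,
)
print(red_flags(survey))
```

Even a study that passes every check can still fall to problems this checklist does not capture, such as selling a different product from the one that was studied.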

Problem #1: Study Participants ≠ Consumers Of Product

When a company conducts a clinical study on their hair growth products, it goes without saying that the people inside that study should reflect the real-world consumers of the product. Pattern hair loss (AGA) is the type of hair loss for which most consumers seek treatment. So, most big-brand companies in the hair loss industry should be conducting their studies on participants with pattern hair loss.

Believe it or not, this doesn’t always happen.

Real-World Examples

Nutrafol & Viviscal

Before going further, it helps to know the four major types of hair loss:

- Androgenic alopecia (AGA), or pattern hair loss. This is caused by interactions between genes and androgens, specifically the hormone DHT. This is a chronic, progressive form of hair loss. Without treatment, it tends to worsen.

- Telogen effluvium (TE). This is caused by acute stressors (e.g., severe flus, emotional trauma, childbirth, etc.) and/or chronic ailments (e.g., micronutrient imbalances, hypothyroidism, etc.). This form of hair loss is almost always temporary; after identifying and resolving the underlying causes, hair counts typically improve within 3-12 months.

- Alopecia areata (AA). This is caused by an autoimmune reaction, and it is partly driven by interactions between our genes and environment.

- Scarring alopecia (SA). This is partly caused by autoimmunity, genetic predisposition, hormones, our environment, and/or our exposure to exogenous irritants and/or inflammatory substances.

Most people seeking hair loss treatments will be suffering from AGA. Therefore, most hair growth products sold over-the-counter (OTC) should target consumers with this type of hair loss, as well as its known causes. This is because AGA offers the biggest market opportunity to hair growth brands.

But what if a marketing team’s goal isn’t to “prove” that their OTC product improves AGA? What if, instead, a company’s goal is only to “prove” that their OTC product improves hair loss? After all, “hair loss” has a broad legal and medical definition. It could constitute AGA, but it could also constitute hair shedding from temporary, self-resolving hair loss disorders like TE.

Here are step-by-step instructions for how a company would go about setting up this study:

Step #1. Register a clinical trial for your nutritional supplement. Design the study as randomized, blinded, and placebo-controlled.

Step #2. Only pick participants to be a part of your study if they don’t have AGA but do have self-perceived hair loss – with the investigators suspecting this hair shedding is due to stress and/or dietary deficiencies.

Step #3. Randomize your participants and give half of them a supplement you believe will resolve their dietary deficiencies in vitamin D, iron, vitamin B12, zinc, and/or any other vitamin, trace element, or nutrient associated with hair shedding.

Step #4. As long as you’ve provided instructions to all participants to not change their dietary or lifestyle habits during your clinical trial period, the half of participants receiving your nutritional supplement will resolve their nutrient deficiencies and, in doing so, see improvements to their hair counts. The other half will retain their nutrient deficiencies, and they won’t see improvements to their hair counts.

Step #5. Publish your results in a peer-reviewed journal.

Now you can legally claim that your nutritional supplement improves hair parameters in a randomized, blinded, placebo-controlled clinical trial – and you can use those claims in your advertisements and promotional materials.

Yes, it’s true that most consumers buying your product will have AGA – which is a different type of hair loss, with a different set of causes. Yes, it’s true that these consumers likely won’t benefit as much from your product. But if your goal is just to prove that your product “improves hair loss”, this flawed study design – and the results that follow – will now grant you legal rights to claim exactly this and advertise those results to consumers with AGA who won’t understand the difference.

So, are there any companies who have done this exact study setup?

Yes. Viviscal and Nutrafol. In fact, Nutrafol has done this exact study setup twice.

Nutrafol also conducted a study that included people with female pattern hair loss. But that trial was completed in 2017. We checked their website, we searched PubMed, and we combed through clinical trial databases. There is no evidence Nutrafol published the results of that one clinical trial anywhere.

Why is that?

If we were to guess, perhaps the results did not demonstrate efficacy for their supplement – probably because that study design happened to include consumers who were more reflective of their real-world customers, and thereby had a hair loss type that requires treatments beyond a nutritional supplement.

Vitamin E Supplements

In 2010, researchers in Malaysia published a peer-reviewed study testing the effects of vitamin E supplementation on hair growth for men and women.

The study was randomized, blinded, and controlled; it ran for 8 months; it used objective hair counting measurements; and the results were incredible: the group receiving vitamin E supplements experienced a 34.5% increase in hair counts.[15]https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3819075/ Those increases are beyond what most people would expect in an 8-month period from FDA-approved hair loss treatments like finasteride and minoxidil.

When hair loss forums caught wind of the study, many people started supplementing with vitamin E in hopes of replicating the results. Unfortunately, most people did not realize that the study on vitamin E was also fundamentally flawed: the investigators did not specify which types of hair loss the participants had.

Moreover, judging by the descriptions of the participants, it appeared some of the participants likely had telogen effluvium and/or autoimmune forms of hair loss like alopecia areata (AA):

Thirty eight male and female volunteers ranging from 18 to 60 years old who met the inclusion criteria were recruited into the trial. Volunteers had varying levels of hair loss, ranging from patchy loss of scalp hair to more severe loss of scalp hair. Hair loss must have been present for at least 2 months and the alopecia area could not have any visual evidence of new hair growth.

For these reasons, it’s no surprise that nearly all people on hair loss forums could not replicate the results. Hair loss forum users tend to have AGA, not autoimmune forms of hair loss like AA.

Moreover, most consumers did not realize that vitamin E deficiency is likely more prevalent in the study’s country of origin – Malaysia – than in the other countries where this vitamin E study became popular. In this situation, the type of hair loss – and the potential deficiency associated with it – will not apply to the average person who reads the study and then buys vitamin E supplements for their hair.

For more examples of this, please see our guide on telogen effluvium and our video on cognitive dissonance when selecting hair loss treatments.

Problem #2: Short Study Durations

When it comes to slowing, stopping, or partially reversing hair loss from AGA, it often takes months – and sometimes years – for an effective treatment to produce cosmetic results.

This is because hair follicle miniaturization – the defining characteristic of AGA – occurs between hair cycles (i.e., right after a hair sheds, while the follicle generates a new hair to replace the old one). Likewise, reversing hair follicle miniaturization with effective treatments also requires hair shedding.

Under treatment conditions, when a hair sheds, its replacement hair may come back thicker. Even still, it will take 90-120 days after a hair sheds for the new hair to poke through the surface of the scalp skin. In some instances, that new hair will need to resize again by undergoing another hair cycle.

This is why treatments like finasteride can take a full two years to produce their full cosmetic effects. Just see these hair assessments from a two-year clinical study on 1mg of oral finasteride daily in over 1,500 men:[16]https://pubmed.ncbi.nlm.nih.gov/9777765/

These long time horizons for hair loss improvements have been known since the 1990s – starting with the first long-term studies ever published on both oral finasteride and topical minoxidil. Consumers’ limited understanding of these timelines is also what leads many people to inadvertently (1) quit treatments too early, and/or (2) quit treatments due to hair shedding – all because they’re under the false impression that these treatments aren’t working, when in reality, they just need more time to work.

Unfortunately, a lot of companies conducting consumer studies are choosing really short study durations, and they’re publishing results that don’t even come close to realistic timelines for evaluation.

Hair Loss Industry Example – ProCelinyl (Revela)

Revela was a company started by Harvard scientists dedicated to finding natural hair growth solutions that worked. They trademarked a natural compound called ProCelinyl, and ran a consumer perception study to see the effects of this compound on hair growth.

The study ran for six weeks.

During this period, most consumers trying the product reported positive results: perceived improvements to their hair and reductions to hair shedding. Revela then published the results of their consumer study in the form of a white paper, accessible on their website:

Now, it’s entirely possible that this molecule helps our hair. However, we cannot come to this conclusion with a six-week study, especially one without a control group.

Keep in mind that hair grows around half an inch per month, so six weeks equates to roughly ¾ of an inch of hair growth. That is not enough time for significant hair cycling, which is what creates opportunities for hair density increases through improvements to hair thickness. To demonstrate these effects, we need months (if not years) of data.
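The timeline arithmetic can be made explicit with a back-of-envelope Python sketch. It uses the half-inch-per-month growth rate and the 90-120 day emergence window mentioned earlier; treating six weeks as 1.5 months and a month as 30 days are our own rough approximations, and the function name is hypothetical.

```python
GROWTH_INCHES_PER_MONTH = 0.5  # approximate scalp hair growth rate cited in this guide
DAYS_PER_MONTH = 30            # rough approximation for this check
EMERGENCE_DAYS = (90, 120)     # days for a replacement hair to reach the scalp surface


def check_study_window(study_months: float) -> dict:
    """Back-of-envelope check of what a study of this length can capture."""
    study_days = study_months * DAYS_PER_MONTH
    earliest, latest = EMERGENCE_DAYS
    return {
        "growth_inches": study_months * GROWTH_INCHES_PER_MONTH,
        # Could a hair that shed on day 0 even reach the surface before the study ends?
        "earliest_regrowth_visible": study_days >= earliest,
        "latest_regrowth_visible": study_days >= latest,
    }


six_weeks = check_study_window(1.5)
six_months = check_study_window(6)
print(six_weeks)
print(six_months)
```

In a six-week study, even a hair that sheds on the first day cannot reach the scalp surface before the study ends. This sketch also ignores that only a fraction of follicles cycle during any given study, which is why even six months captures only part of a treatment’s effect.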

Again, this didn’t stop Revela from advertising their serum as an alternative to minoxidil – despite never including a treatment-control group in their own study.

In this case, Revela appears to have been rewarded for their misleading claims. In 2023, the company was acquired for $76 million – and just a couple years after starting the business.

Those are the incentives waiting for hair loss companies to perform this level of data obfuscation, which is why these problems (along with poorly enforced legal ramifications for bad actors) are likely to persist unless consumers begin to educate themselves about what they’re up against.

The bottom line: look for study durations of at least six months. Any study shorter than that is problematic.

Problem #3: Manipulated Measurement Endpoints

Beyond having a clinical trial that is randomized, blinded, and controlled – with participants who reflect the target consumer and a study duration of at least six months – the next thing we want a well-designed study to do is pick objective measurement endpoints for hair parameters.

In other words, we want investigators to track hair change through an array of subjective and objective metrics:

- Subjective
  - Photographic assessments (i.e., before-after photos)
  - Investigator assessments (i.e., researcher ratings of the participants’ hair changes)
  - Participant assessments (i.e., participant ratings of their own hair changes)

- Partially Subjective
  - Trichograms (i.e., plucking bundles of hairs from the scalp to examine anagen:telogen ratios). This is partially subjective because researchers have to bundle and tug at the hair, which requires human labor and is thus not possible to 100% standardize.

- Objective
  - Phototrichograms (i.e., micro-zoomed photos of the scalp, analyzed by software to measure the number of total hairs, terminal hairs, vellus hairs, and hair thicknesses).

By far, the most objective tool for measuring hair changes is a phototrichogram. And the best-of-the-best studies will tattoo a temporary freckle into the scalp to establish a “target area hair count” and perfectly center their phototrichogram measurements before and after treatment. Here’s an example:[17]https://synapse.koreamed.org/articles/1045656

Problem #1: Subjective Measurements → Unclear Results

When reporting results of a hair loss treatment, some studies do not include objective data – such as a phototrichogram. Under these circumstances, researchers are left using subjective measurements, like before-after photos, data aggregations of investigator- and/or self-assessments, and/or hair pull tests.

These metrics are nice to have. In fact, they often tend to directionally align with phototrichogram data. However, by themselves, they’re subjective tools to assess hair parameters, and if they’re the only tools used in a study, that means the study’s results are entirely subjective.

Example – Assessments Of Before-After Photos

In this respect, subjective measurements can be manipulated – even unintentionally. Just see the following photoset.

Here, we kept camera distance, hair styling, and hair wetness consistent. However, we slightly tilted the head to change the angle of light hitting the crown.

The right photo gives off the appearance of thicker hair than the left photo, but there is no difference; these photos were taken seconds apart.

Therefore, if an entire investigation team relies exclusively on before-after photos to assess treatment results, but that team took photos with the same limitations as the one above, the analysis of results might produce positive (or negative) results when there aren’t any real results at all.

Problem #2: Deceptive And/Or Incomplete Reporting From Phototrichogram Data

Given the power of a phototrichogram, we would presume that it would be hard to “cheat” results from such a device. Unfortunately, it’s not.

Nearly all phototrichograms come attached with software capable of reporting the following metrics:

- Total hair counts

- Terminal hair counts

- Vellus hair counts

- Average hair thickness

Terminal hairs represent hairs that are thick enough to produce cosmetic differences in hair density to the naked eye – at least at scale (i.e., when enough of them are present).

Conversely, vellus hairs are thin, wispy, and barely detectable unless studying the scalp up-close with a dermatoscope and/or micro-zoomed lens. Vellus hairs are ≤ 40µm in thickness and ≤ 30 mm in length.

This photo (taken by Jeffrey Donovan) perfectly illustrates the differences between these types of hairs:[18]https://donovanmedical.com/

In interventional studies on hair loss treatments, vellus hairs are stimulated all the time. However, these hairs do not produce any cosmetic improvements to hair density; rather, they just increase total hair counts.

For this reason, well-designed hair loss studies will report improvements to hair counts in terms of terminal hair counts, not total hair counts. If they reported improvements to total hair counts, clinicians would often observe 50-200% increases in hair counts, while simultaneously, photographic assessments by both the patients and investigators would suggest far less impressive satisfaction scores.

In this respect, changes to (1) terminal hair counts, and (2) hair thicknesses across terminal hairs, are the two most important metrics of reporting for phototrichograms.

If these metrics are missing, it should be assumed that the investigators are reporting hair counts as total hair counts – as in, changes to the sum of vellus and terminal hairs. This is a problem, because it can create statistically significant increases to hair counts that look good on paper, but don’t reflect real-world results.
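The gap between total and terminal hair counts can be illustrated with a small Python sketch. It classifies hairs using the vellus thresholds given above (≤ 40 µm thick and ≤ 30 mm long); other studies may define vellus hairs differently. The hair lists are invented for illustration: the hypothetical treatment mostly adds new vellus hairs and barely changes the terminal count.

```python
from dataclasses import dataclass

# Vellus thresholds as stated in this guide; other studies may use different cutoffs.
VELLUS_MAX_THICKNESS_UM = 40
VELLUS_MAX_LENGTH_MM = 30


@dataclass
class Hair:
    thickness_um: float
    length_mm: float


def is_vellus(hair: Hair) -> bool:
    """Classify a hair as vellus if it falls under both thresholds."""
    return (hair.thickness_um <= VELLUS_MAX_THICKNESS_UM
            and hair.length_mm <= VELLUS_MAX_LENGTH_MM)


def count_hairs(hairs: list[Hair]) -> dict[str, int]:
    """Split a target-area count into total, terminal, and vellus hairs."""
    vellus = sum(1 for h in hairs if is_vellus(h))
    return {"total": len(hairs), "terminal": len(hairs) - vellus, "vellus": vellus}


def percent_change(before: int, after: int) -> float:
    return round((after - before) / before * 100, 1)


# Hypothetical target-area counts, invented for illustration
baseline = [Hair(70, 40) for _ in range(100)] + [Hair(25, 10) for _ in range(20)]
follow_up = [Hair(70, 40) for _ in range(102)] + [Hair(25, 10) for _ in range(80)]

before, after = count_hairs(baseline), count_hairs(follow_up)
total_change = percent_change(before["total"], after["total"])
terminal_change = percent_change(before["terminal"], after["terminal"])
print(f"Total hair count change:    {total_change:+.1f}%")
print(f"Terminal hair count change: {terminal_change:+.1f}%")
```

The same scalp data can be reported as a roughly 50% gain or a 2% gain, depending on which count the investigators choose to publish.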

Hair Loss Industry Example – Copper Peptides (ALAVAX)

Companies selling copper peptides often cite a clinical study on ALAVAX to bolster their claims that these peptides can regrow hair.[19]https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4969472/ That study tested copper peptides (in addition to other ingredients) on participants with androgenic alopecia over a six-month period.

At the end of the study, users of ALAVAX reported roughly an 80% increase in hair counts. These results – like the vitamin E study mentioned earlier – appear to outperform expectations from the best hair growth drugs currently on the market.

So why isn’t everyone using ALAVAX for hair regeneration? And how come people trying copper peptides haven’t anecdotally reported results on par with that ALAVAX study?

A closer look at the study reveals that the investigators did not specify whether those hair counts came from terminal hairs alone, or from vellus + terminal hairs. Upon reviewing some of the secondary endpoints – like patient satisfaction scores – a troubling narrative emerges: nearly 43% of participants trying ALAVAX felt they got poor results.

What could explain the large discrepancy in hair count increases vs. patient satisfaction scores?

More than likely, it’s that the majority of those hair count improvements came from vellus hairs. Again, these are cosmetically imperceptible, get stimulated all the time in hair loss studies, but unfortunately do not provide much value (cosmetically or otherwise) to the patients using the product.

Companies selling copper peptides tend to leave out this context.

Problem #4: Product Studied ≠ Product Sold

When reviewing a clinical study, it’s reasonable to presume that the product inside that study will be the same one sold by the company when visiting their website.

Unfortunately, in the hair loss industry, this presumption is not always true. Here are a series of examples.

Problem #1: Product Studied ≠ Product Sold In Online Store

Hair Loss Industry Example – Capillus

In our team’s company investigation into Capillus – a laser cap provider – we discovered that they often advertise the results of a clinical study conducted on their proprietary laser diode helmet, which showed improvements to terminal hair counts versus a placebo device in women with pattern hair loss.[20]https://pubmed.ncbi.nlm.nih.gov/28328705/

At face value, this is a great signal.

However, when we visited the company’s website, we realized that this clinically studied helmet is just one of many laser caps Capillus sells, and one of the highest-priced. The company also offers less expensive laser helmets, albeit with far fewer diodes.

This is a problem for several reasons:

- Capillus garners consumer trust and attention by advertising the results of its clinical study.

- When consumers visit the website, the studied cap is among the highest-priced options available for purchase.

- Capillus then sells other laser caps – that remain unstudied – at lower price points. These lower-priced caps likely sell more volume, but their effects on hair parameters remain unknown.

In our opinion, most consumers do not recognize this bait-and-switch marketing tactic. While it doesn’t technically break truth-in-advertising laws, it can harm consumers who believe they are purchasing a device with demonstrated clinical efficacy (because that’s what the original advertisement told them) when they have actually purchased a device that remains unstudied.

Problem #2: Products That Don’t Meet Their Labeling Claims

Hair Loss Industry Examples

In the health industry, over-the-counter supplements and topicals receive very little regulation. Consumers are often asked to trust that whatever is listed on the ingredients label is actually inside the product, and in the specified amounts.

To put these labeling claims to the test, we had our members vote on a series of products for us to buy, send to a third-party laboratory, and spot-test for the accuracy of key active ingredients.

As of today, ~80% of the products we’ve tested have failed at least one of their labeling claims. Some have been near-complete misses, with ingredients listed on the label barely detectable in the finished product.
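One way to picture what “failing a labeling claim” means is sketched below in Python. It assumes a hypothetical rule: a product fails if any tested ingredient measures below 90% of its labeled amount. That threshold, the `IngredientResult` type, and the ingredient names are illustrative assumptions, not the criteria or data from our laboratory testing.

```python
from dataclasses import dataclass


@dataclass
class IngredientResult:
    name: str
    labeled_mg: float   # amount per serving claimed on the label
    measured_mg: float  # amount per serving reported by the lab


# Hypothetical pass threshold: the measured amount must be at least 90% of the
# labeled amount. This is an illustrative assumption, not a lab or legal standard.
MIN_FRACTION_OF_LABEL = 0.90


def failed_claims(results: list[IngredientResult]) -> list[str]:
    """Return the names of ingredients that fall short of their labeled amount."""
    return [
        r.name
        for r in results
        if r.measured_mg < MIN_FRACTION_OF_LABEL * r.labeled_mg
    ]


def product_fails(results: list[IngredientResult]) -> bool:
    """A product fails if at least one tested ingredient misses its label claim."""
    return len(failed_claims(results)) > 0


# Hypothetical product: one ingredient close to label, one near-complete miss.
product = [
    IngredientResult("ingredient A", labeled_mg=50.0, measured_mg=48.0),
    IngredientResult("ingredient B", labeled_mg=100.0, measured_mg=3.0),
]
print(failed_claims(product))
print(product_fails(product))
```

Under this any-ingredient rule, a single underdosed ingredient is enough to fail the whole product, even if every other ingredient matches its label.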

Problem #3: Delivery Vehicles Studied ≠ Delivery Vehicles Of The Product

There are many ways to “deliver” an active ingredient to help treat hair loss, such as:

- Orally (i.e., ingestion of the substance)

- Topically (i.e., applying the substance to the scalp)

- Intradermally, intravenously, and/or intramuscularly (i.e., injecting the substance into the body)

- Lathering (i.e., shampooing)

Unfortunately, companies in the hair loss industry often presume that all delivery methods are created equal. They presume that the results of a study testing an oral medication will also apply to topical applications, and that the results of a study testing topical applications will also apply to lathering.

From a pharmacokinetic standpoint, there is no logic behind these presumptions.

Substances ingested orally often undergo first-pass metabolism in the liver, where they are biotransformed into other substances, and then travel through the systemic circulation before reaching tissue sites like the scalp. For this reason, oral delivery is not always directly comparable to topical delivery.

Similarly, substances delivered topically often need to sit on the scalp for hours before they appreciably absorb into the skin, where they can then affect our hair follicles. Consider topical dutasteride: after 12 hours, 60.8% of the dutasteride still remains unabsorbed, and just 26.2% has actually entered the skin.

For this reason, topical delivery methods are not equivalent to shampoos. The scalp contact time of a topical versus a shampoo is virtually incomparable: 12+ hours (topical) versus 1–3 minutes (shampoo). And while some active ingredients, like ketoconazole, can exert their effects within a short time frame (and are thus appropriate for shampoo formulations), such ingredients are few and far between.
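To put the contact-time gap in rough numbers, the arithmetic below uses only the figures above (12+ hours for a leave-on topical, 1–3 minutes for a shampoo). Because 12 hours is treated as a lower bound for the topical, the resulting ratio is a floor. Contact time is also not the same thing as absorbed dose, so this illustrates scale rather than estimating how much of an ingredient reaches the follicles.

```latex
\begin{aligned}
t_{\text{topical}} &\ge 12~\text{h} = 12 \times 60~\text{min} = 720~\text{min} \\
t_{\text{shampoo}} &\approx 1\text{--}3~\text{min} \\
\frac{t_{\text{topical}}}{t_{\text{shampoo}}} &\ge \frac{720}{3} = 240 \quad \text{(3-minute lather)} \\
\frac{t_{\text{topical}}}{t_{\text{shampoo}}} &\ge \frac{720}{1} = 720 \quad \text{(1-minute lather)}
\end{aligned}
```

So even on this conservative reading, a leave-on topical sits on the scalp at least 240 times longer than a shampoo does.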

Even so, this doesn’t stop companies from selling hair growth shampoos containing saw palmetto, biotin, and caffeine, all while advertising language like “clinically proven ingredients.”

Yes, these ingredients have been clinically demonstrated to improve hair parameters, but in oral or topical delivery vehicles. They aren’t clinically proven in shampoos. We cannot presume that the results of a topical study will translate to a shampoo.

Hair Loss Industry Example – Alpecin Shampoo

Alpecin made this exact marketing error when advertising its caffeine-based shampoo: it used a company-sponsored study on a topical caffeine formulation (i.e., not a shampoo) to claim that its product could “help reduce hair loss”.

Alpecin actually got in trouble for this with the UK’s Advertising Standards Authority, which banned the ad.[21] What Alpecin claimed was problematic and deceptive, but in the grand scheme of things, it feels like a far smaller offense than many of the other practices we’ve covered throughout this guide.

That doesn’t make what Alpecin did right, but it does contextualize just how problematic the hair loss industry has become, and why consumers often feel so disadvantaged: promised the world by products advertised on their newsfeeds, but with very little hair regrowth to show for their financial investments.

Final Thoughts

When it comes to evaluating hair loss studies, this guide only exposes the tip of the iceberg. There are so many other factors that we did not cover, such as:

- Study participant numbers

- Author conflicts of interest

- White papers versus peer-reviewed papers

- Journal quality

- Study replicability

- Effect sizes in meta-analyses

- Alignment between hair parameter outcomes and suspected hair growth mechanisms

…and so many others.

We intend to revisit these topics (and more) in future follow-ups.

For now, we hope your takeaway from this guide is that the hierarchy of evidence is our friend. Unfortunately, it has also been co-opted by marketers who’ve found ways to manipulate clinical trial outcomes so they can sell more products.

This means we can no longer trust the results of a study simply because it is randomized, blinded, and controlled. We now need to look deeper into the study’s participants, duration, endpoint measurements, and product-market fit – all to ensure the study results will apply to most real-world consumers of that product.

Whenever you come across a new product ad, Reddit post, or article claiming a “breakthrough treatment” for hair growth, please use this guide to evaluate the true evidence behind that product. In doing so, we hope you save tens of thousands of dollars over the long term by avoiding bad product purchases and becoming a more informed consumer.

Rob English is a researcher, medical editor, and the founder of perfecthairhealth.com. He acts as a peer reviewer for scholarly journals and has published five peer-reviewed papers on androgenic alopecia. He writes regularly about the science behind hair loss (and hair growth). Feel free to browse his long-form articles and publications throughout this site.

References

| # | Source |
|---|---|
| 1 | https://www.cancer.org/cancer/types/prostate-cancer/about/key-statistics.html#:~:text=stage%20prostate%20cancer.-,Risk%20of%20prostate%20cancer,rare%20in%20men%20under%2040. |
| 2 | https://www.fredhutch.org/en/news/center-news/2014/02/vitamin-e-selenium-prostate-cancer-risk.html |
| 3 | https://pubmed.ncbi.nlm.nih.gov/17032985/ |
| 4 | https://www.reddit.com/r/tressless/comments/ktbkw3/reishi_mushroom_for_hair_growth/ |
| 5 | https://www.pantene.in/en-in/hair-fall-problems-and-hair-care-solutions/hair-fall-causing-food/ |
| 6 | https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5315033/ |
| 7 | https://www.researchgate.net/publication/333498018_Hair_Loss_-_Symptoms_and_Causes_How_Functional_Food_Can_Help |
| 8 | https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3712661/ |
| 9 | https://pubmed.ncbi.nlm.nih.gov/19496976/ |
| 10 | https://www.scirp.org/journal/paperinformation.aspx?paperid=96669 |
| 11 | https://n.neurology.org/content/44/1/16 |
| 12 | https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8526366/ |
| 13 | https://link.springer.com/article/10.1007/s13555-019-0281-6 |
| 14 | https://onlinelibrary.wiley.com/doi/abs/10.1111/dth.12778 |
| 15 | https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3819075/ |
| 16 | https://pubmed.ncbi.nlm.nih.gov/9777765/ |
| 17 | https://synapse.koreamed.org/articles/1045656 |
| 18 | https://donovanmedical.com/ |
| 19 | https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4969472/ |
| 20 | https://pubmed.ncbi.nlm.nih.gov/28328705/ |
| 21 | https://www.independent.co.uk/news/uk/home-news/alpecin-caffeine-shampoo-banned-reduce-hair-loss-advertising-standards-authority-a8276951.html |